Human-computer interaction is moving forward faster than ever, and it's changing our relationship with technology in ways that seemed impossible ten years ago. HCI has become crucial as it continues to connect people and technology. Traditional interfaces are giving way to natural, immersive experiences that adapt to human behavior.

Voice AI systems now operate at latencies between 500 milliseconds and 1 second, which approaches the natural flow of human conversation. AI integration enhances human-engaged computing in several ways: advanced systems that combine multimodal data processing, artificial intelligence, and affective computing now respond to users' emotions, behaviors, and specific needs. Looking toward 2030, AR and VR technologies will take us beyond our familiar screens and create environments where experience becomes our primary way to interact.

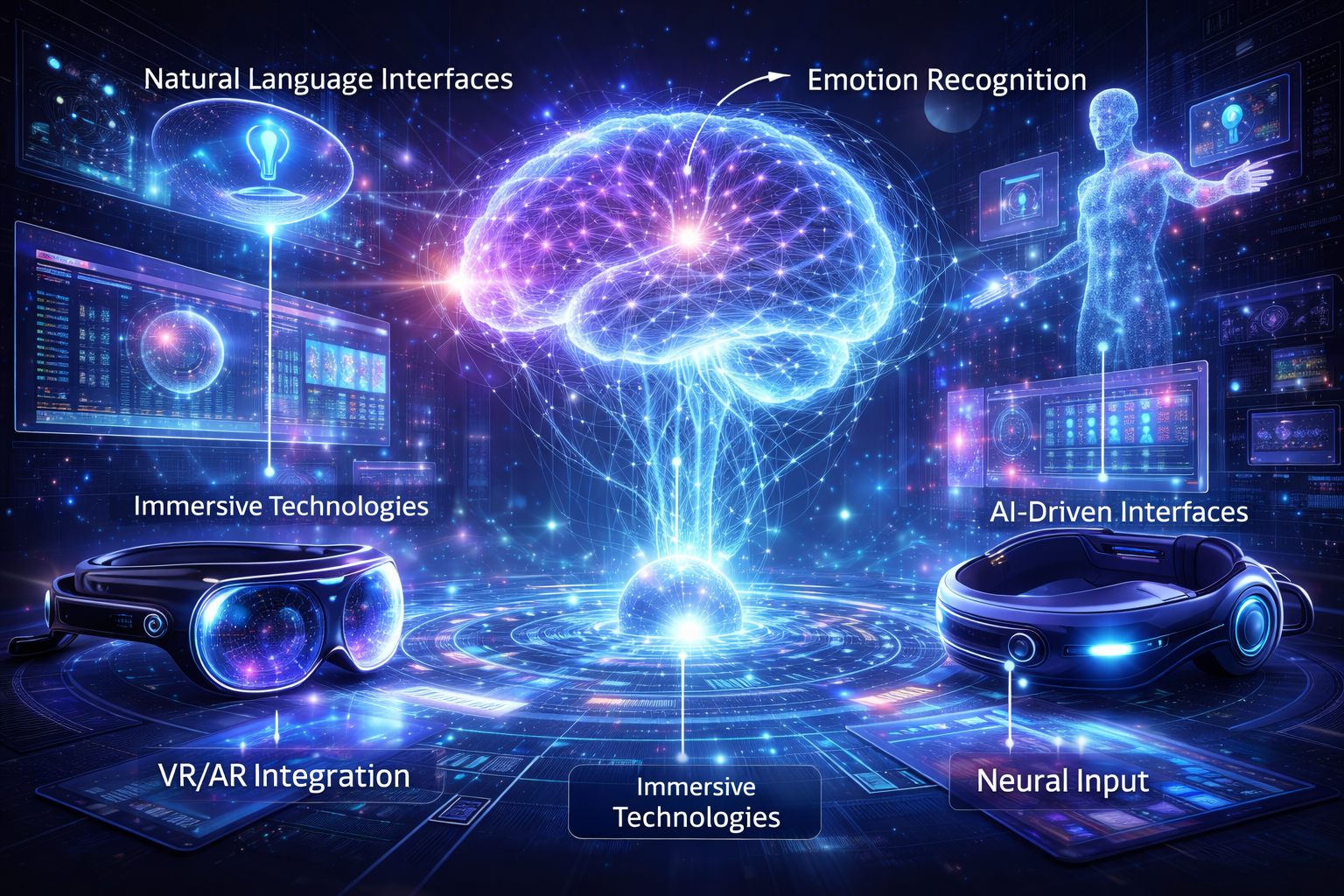

This piece explores how AI-driven interfaces, immersive technologies, and brain-computer interfaces could reshape our digital interactions by 2030. We'll cover the technological possibilities and what they mean, from today's mobile and multi-screen platforms that demand seamless cross-device experiences to tomorrow's thought-based control systems.

AI-driven interfaces mark a major step forward in how we interact with computers. We have moved from manual inputs to cognitive understanding. This shift fundamentally changes how humans and machines relate, making technology more user-friendly and responsive to natural behaviors.

Computers now understand and respond to everyday language, which has transformed user experience in many industries. Natural language processing (NLP) combines computational linguistics with machine learning to help computers understand and generate human language.

You can find these interfaces everywhere in daily life:

- Search engines that understand conversational queries

- Digital assistants like Alexa, Siri, and Google Assistant responding to voice commands

- Customer service chatbots handling routine queries

- Language translation services that preserve context and nuance

Natural language data interfaces (NLDIs) have fundamentally changed data accessibility. Users can now query databases using conversational language instead of technical commands. This technology proves especially valuable when organizations need to make data access easier for their workforce. Business users can explore performance metrics and track trends without knowing database schemas or query syntax.
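To make the idea of querying a database conversationally more concrete, here is a minimal sketch. It is not how production NLDIs work (those typically rely on trained language models); it uses simple keyword rules as a stand-in. The `performance` table, its columns, and the phrase patterns are all hypothetical and exist only for illustration.

```python
import re
import sqlite3

# Hypothetical vocabulary: words a user might say, mapped to column names.
METRIC_COLUMNS = {"revenue": "revenue", "sales": "revenue", "orders": "orders"}


def question_to_sql(question):
    """Translate a narrow family of questions into a parameterized SQL query."""
    text = question.lower()
    metric = next((col for word, col in METRIC_COLUMNS.items() if word in text), None)
    if metric is None:
        raise ValueError("no known metric in question")
    aggregate = "AVG" if "average" in text else "SUM"
    # The column name comes from the whitelist above, never from raw user text.
    sql = f"SELECT {aggregate}({metric}) FROM performance"
    params = ()
    year = re.search(r"\b(20\d{2})\b", text)
    if year:
        sql += " WHERE year = ?"
        params = (int(year.group(1)),)
    return sql, params


def answer(conn, question):
    """Run the translated query and return the single aggregated value."""
    sql, params = question_to_sql(question)
    return conn.execute(sql, params).fetchone()[0]


def demo_connection():
    """Build an in-memory database with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE performance (year INTEGER, revenue REAL, orders INTEGER)")
    conn.executemany(
        "INSERT INTO performance VALUES (?, ?, ?)",
        [(2023, 100.0, 10), (2023, 150.0, 20), (2024, 300.0, 30)],
    )
    return conn
```

Calling `answer(demo_connection(), "What was total revenue in 2023?")` runs a summed, year-filtered query without the user ever seeing the schema, which is the core promise of this kind of interface.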

Digital systems now include emotion detection, which creates new ways to improve engagement. Modern adaptive systems can analyze a user's facial expressions through webcams and adjust interaction flows based on emotional states. Educational contexts benefit greatly from this capability. Adaptive Learning Technologies (ALTs) can assess learners' performance in real time and provide feedback that matches their emotional state.

Research has analyzed young learners using math applications. The results show that physiological, experiential, and behavioral measures of emotion work together and offer useful insight into emotional responses to feedback. These multimodal data streams have identified distinct profiles that reflect different emotional responses to system feedback.

AI-driven interfaces have become more context-aware through multimodal data processing. These systems combine audio, visual, and textual inputs to better understand user intent. In clinical settings, for instance, speech-based activity recognition systems identify activities without visual cues or medical devices.

New context-aware multimodal networks address previous limitations. They capture dependencies between utterances and apply attention over the conversation history. Systems can now retrieve information from past interactions, creating a more natural experience. Context-aware multimodal pretraining also produces representations that can take in additional context, showing better few-shot adaptation across a range of tasks.
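As a rough illustration of attending over past utterances, the sketch below applies scaled dot-product attention to a list of utterance embeddings. It is a generic textbook formulation, not the architecture of any specific network mentioned above; the function name and the toy vectors are assumptions made for this example.

```python
import math


def attend_to_history(query, history):
    """Return softmax attention weights over past utterances and the blended context.

    query:   embedding of the current utterance (list of floats)
    history: embeddings of earlier utterances, each the same length as query
    """
    dim = len(query)
    # Similarity of the current utterance to each past one, scaled by sqrt(dim).
    scores = [
        sum(q * h for q, h in zip(query, past)) / math.sqrt(dim)
        for past in history
    ]
    # Numerically stable softmax: subtract the largest score before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: weighted average of the past utterance embeddings.
    context = [
        sum(w * past[i] for w, past in zip(weights, history))
        for i in range(dim)
    ]
    return weights, context
```

Past utterances that resemble the current one receive larger weights, so the resulting context vector leans toward the most relevant parts of the conversation.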

These cognitive interfaces will evolve more as we approach 2030, creating natural and user-friendly ways for humans and computers to interact.

Immersive technologies blur the lines between physical and digital realities. They radically change how we interact, moving beyond traditional interfaces. These technologies alter how users perceive their environment and create experiences that once existed only in science fiction.

Augmented reality (AR) overlays digital information onto the real world, while virtual reality (VR) takes users to completely simulated environments. These technologies blend with human-engaged computing to turn passive experiences into active participation. Users become more immersed and emotionally connected.

AR/VR integration lets users become part of the story through multi-sensory experiences instead of just watching from the sidelines.

Medical professionals can practice complex surgeries in controlled virtual environments without any risk to patient safety. Architects also benefit as VR lets them walk through virtual models of their designs.

Artificial intelligence enhances virtual environments by making them responsive and personalized. AI creates environments that adapt based on user preferences and behaviors. Generative AI builds immersive virtual spaces unique to each user, making every experience different.

AI also gives virtual characters human-like behaviors. They show emotions, learn from interactions, and make their own decisions. This intelligence creates deeper connections between users and digital content.

Mixed reality (MR) bridges AR and VR. Users can interact with virtual and physical elements at the same time. Gesture-based navigation has become an intuitive interaction method in these environments. Recent MR headsets like HoloLens track hands natively, so users can manipulate digital objects through natural movements.

Hand interactions in MR applications split into two categories: gesture-based interactions and simulated physics-based interactions. Universal standards for gesture design don't exist yet. Each approach targets specific contexts and user needs. Natural interaction methods keep evolving toward experiences that match how we interact with physical objects.
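A gesture-based interaction can be as simple as checking the distance between two tracked fingertips. The sketch below detects a pinch from thumb-tip and index-tip positions. It does not use any headset SDK; the coordinate format (meters, as `(x, y, z)` tuples) and the 2 cm threshold are assumptions chosen for illustration, in line with the point that no universal gesture standard exists.

```python
import math

# Assumed threshold: fingertips closer than 2 cm count as a pinch.
PINCH_THRESHOLD_M = 0.02


def is_pinching(thumb_tip, index_tip, threshold=PINCH_THRESHOLD_M):
    """Return True when the two fingertips are closer than the threshold."""
    return math.dist(thumb_tip, index_tip) < threshold


def pinch_events(frames, threshold=PINCH_THRESHOLD_M):
    """Turn per-frame fingertip positions into pinch start/end events.

    frames: iterable of (thumb_tip, index_tip) pairs, one per tracking frame.
    Returns a list of (frame_index, "pinch_start" | "pinch_end") tuples.
    """
    events = []
    pinching = False
    for i, (thumb_tip, index_tip) in enumerate(frames):
        now = is_pinching(thumb_tip, index_tip, threshold)
        if now != pinching:
            events.append((i, "pinch_start" if now else "pinch_end"))
            pinching = now
    return events
```

A real application would likely add smoothing or separate start and end thresholds to avoid flicker near the boundary; this version keeps only the core idea.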

Brain-computer interfaces (BCIs) represent the next frontier in human-computer interaction. These systems create a direct path between neural activity and digital systems. Unlike regular interfaces, BCIs turn brain signals into commands without physical movement, and they could transform our interaction with technology by 2030.

Electroencephalography (EEG) remains the most practical non-invasive method to capture brain signals. Its portability, accessibility, and lower cost make it stand out from other approaches. Traditional wet electrodes with conductive gel deliver excellent recording quality. However, these electrodes come with everyday challenges - they need careful setup and regular gel reapplication, and they can feel uncomfortable. Recent progress in dry electrode technology and better signal processing makes these systems easier to use while keeping accuracy rates of up to 90% for healthy users.

Cognitive load, the mental effort needed to process information, plays a vital role in making BCIs work better. Modern systems now use multiple methods to assess cognitive states. They combine physiological signals like brain activity and heart rate variability with behavioral patterns. These adaptive interfaces can track and adjust to the user's mental state in real time. When they detect mental fatigue, they reduce the complexity of the interface. Machine learning algorithms, combined with signal processing and deep learning methods, make these cognitive load measurements more precise.
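The adaptation loop described above can be sketched as a fused load score that drives interface complexity. Everything numeric here is an assumption for illustration: the input ranges, the weights, and the thresholds are made up, and the choice of an EEG theta/alpha ratio and heart rate variability (RMSSD) as inputs is one plausible option rather than a description of any particular system.

```python
def clamp01(value):
    """Clamp a number into the range [0, 1]."""
    return max(0.0, min(1.0, value))


def load_index(theta_alpha_ratio, hrv_rmssd_ms, error_rate):
    """Fuse physiological and behavioral signals into a 0..1 load score.

    Assumed (hypothetical) conventions:
    - a higher EEG theta/alpha ratio suggests higher load (0.5 -> 0, 2.0 -> 1)
    - lower heart rate variability suggests higher load (60 ms -> 0, 20 ms -> 1)
    - error_rate is the fraction of recent user actions that were mistakes
    """
    eeg = clamp01((theta_alpha_ratio - 0.5) / 1.5)
    heart = clamp01((60.0 - hrv_rmssd_ms) / 40.0)
    behavior = clamp01(error_rate)
    # Illustrative weights; a real system would learn or calibrate these.
    return 0.5 * eeg + 0.3 * heart + 0.2 * behavior


def next_complexity(current, load, high=0.7, low=0.3, levels=(1, 3)):
    """Step interface complexity down under high load, up under low load."""
    if load > high:
        return max(levels[0], current - 1)
    if load < low:
        return min(levels[1], current + 1)
    return current
```

The gap between the `low` and `high` thresholds keeps the interface from flipping back and forth when the score hovers around a single cutoff.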

BCIs now reach beyond medical uses into entertainment and accessibility. For people with motor disabilities, BCIs make it possible to control games using brain signals alone, opening new ways to participate in digital activities. The market for BCI technology in gaming is expected to grow from $1.5 billion in 2022 to $5.2 billion by 2030, driven by increased interest in immersive experiences. Current examples include multiplayer setups like BrainNet, where three players use neural signals to play a Tetris-like game. BCIs are more than gaming tools - they give people with conditions like ALS a way to communicate without using muscles.
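For a sense of scale, the growth projection above can be converted into a compound annual growth rate. The short sketch below assumes smooth year-over-year compounding between the two quoted figures, which is a simplification; the function name is ours.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text: $1.5 billion (2022) to $5.2 billion (2030).
rate = cagr(1.5, 5.2, 2030 - 2022)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption the projection works out to a little under 17% growth per year, sustained for eight years.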

The rise of advanced HCI technologies brings ethical concerns to the forefront. New combinations of cognitive, physical, and emotional interfaces create challenges that need careful attention.

Emotion recognition systems show clear biases that affect their reliability across populations. Cultural and regional backgrounds shape how people express and interpret emotions. Systems that learn from region-specific data often fail to accurately read expressions from other cultures. For example, models trained on North American data struggle to interpret East Asian expressions. These problems go beyond cultural differences. Many datasets leave out certain groups, creating demographic gaps. As a result, algorithmic bias leads to unfair classifications that hurt marginalized communities more than others.
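One practical way to surface this kind of bias is to break a classifier's accuracy down by demographic group instead of reporting a single overall number. The sketch below assumes evaluation records shaped as `(group, true_label, predicted_label)` tuples; that format and the function names are hypothetical, and accuracy gap is only one of several fairness measures in use.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute classification accuracy separately for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted_label in records:
        total[group] += 1
        correct[group] += int(true_label == predicted_label)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())
```

A large gap signals that the model serves some groups much better than others, even when its overall accuracy looks acceptable.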

BCIs create serious privacy risks by collecting neural signals that can reveal our deepest thoughts. These systems may read and decode neural signals without explicit consent, exposing sensitive details about our thoughts, emotions, and subconscious states. Neural data might reveal personal information ranging from food preferences to medical conditions. Mental privacy faces unique challenges as BCIs and wearables gather "cognitive biometrics." These metrics reveal information about our cognitive, affective, and conative mental states.

AI systems need transparency at every step of development and use. Users find it hard to trust systems that work like a "black box". This becomes a bigger challenge with generative AI models that are more complex than traditional systems. Explainable AI helps users understand why systems make specific recommendations.

Human-centered AI creates systems that put human needs, satisfaction, and trust first. Guidelines exist but ethical principles often lack practical details for implementation. The EU AI Act, GDPR, and recent executive orders now require AI transparency and accountability. Building responsible AI needs proper safeguards. These include independent reviews, community feedback during development, and open standards.

Human-computer interaction stands at a turning point as we look toward 2030. This piece has explored how AI-driven interfaces will change our digital experiences by understanding not just our commands but our intentions and emotions. Natural language processing has already started this shift, making technology accessible to everyone regardless of their technical expertise.

More responsive systems will emerge through emotion recognition technology that adapts to our psychological states. These advances are especially important for educational and healthcare applications. AR and VR technologies keep pushing the boundaries between physical and digital worlds and create experiences that engage multiple senses at once.

Brain-computer interfaces stand out as the next frontier in HCI. These systems might reach mainstream use by 2030. They offer unmatched opportunities to improve accessibility, but they also raise important ethical questions about mental privacy.

We need to tackle these ethical implications with the same enthusiasm we show for development. Recognition systems can show bias, neural data collection raises privacy concerns, and AI decision-making needs transparency. We also need regulatory frameworks that evolve with these technologies and keep user-focused principles at the center.

Technology will respond to our thoughts, emotions, and situations, not just our explicit commands. We have a long way to go, but we can build on the progress made so far. This development promises intuitive, accessible, and personalized computing experiences. The move from touch to thought isn't just about technological progress - it fundamentally changes how humans and computers work together in our daily lives.

AI will enable more intuitive and responsive interfaces, incorporating natural language processing, emotion recognition, and context-aware systems. This will allow computers to understand and respond to human intentions and emotions, creating more personalized and adaptive user experiences.

Immersive technologies like AR and VR will create more engaging and interactive experiences. They will blur the line between physical and digital realities, enabling users to interact with virtual environments and objects in natural ways, revolutionizing fields such as healthcare, education, and entertainment.

While BCIs are advancing rapidly, their mainstream adoption by 2030 is still uncertain. However, they show great promise in areas like accessibility and gaming, allowing direct neural communication with computers and potentially enabling thought-based control of devices.

Key ethical concerns include bias in emotion and gesture recognition systems, privacy issues related to brain-computer data collection, the need for transparency in AI-driven interfaces, and the importance of developing human-centric regulatory frameworks for these technologies.

By 2030, computing experiences will become more seamless and integrated into our daily lives. We can expect more ambient and ubiquitous computing, with devices that understand context, adapt to user behavior, and operate with minimal human intervention, creating a more intuitive and personalized technological environment.
